Monkeys with a brain-machine-brain interface move and feel virtual objects

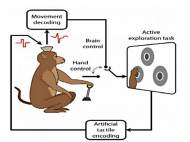

Two monkeys trained at the Duke University Center for Neuroengineering learned to use their brain activity to move an avatar hand and identify the texture of virtual objects. Unlike earlier research in which a monkey used its brain to move a robotic arm, this is the first demonstration of two-way interaction between a primate brain and a virtual body.

“This is the first demonstration of a brain-machine-brain interface (BMBI) that establishes a direct, bidirectional link between a brain and a virtual body,” said lead researcher Miguel Nicolelis, MD, PhD, professor of neurobiology at Duke University Medical Center and co-director of the Duke University Center for Neuroengineering. “This is also the first time we’ve observed a brain controlling a virtual arm that explores objects while the brain simultaneously receives electrical feedback signals that describe the fine texture of objects ‘touched’ by the monkey’s newly acquired virtual hand.”

Instead of using the muscles in their bodies, the monkeys used their electrical brain activity to direct an avatar's virtual hands to the surfaces of virtual objects and, upon contact, were able to tell their textures apart.

The virtual objects used in the experiments were visually identical, but they were designed to have different artificial textures that could be detected only if the animals explored them with virtual hands controlled directly by their brains' electrical activity. The texture of each virtual object corresponded to one of three different patterns of minute electrical signals transmitted to the monkeys’ brains.

The combined electrical activity of populations of 50 to 200 neurons in the monkeys’ motor cortex controlled the steering of the avatar arm. At the same time, thousands of neurons in the primary tactile cortex received continuous electrical feedback from the virtual hand’s palm, which let the monkeys discriminate between objects based on their texture alone.

The findings could pave the way for robotic exoskeletons for severely paralyzed patients. Sensors distributed across such an exoskeleton would generate the kind of tactile feedback the patient’s brain needs to identify the texture, shape and temperature of objects, as well as many features of the surface on which the patient walks.

This overall therapeutic approach is the one chosen by the Walk Again Project, an international non-profit consortium established by a team of Brazilian, American, Swiss, and German scientists. The project aims to restore full-body mobility to quadriplegic patients through a brain-machine-brain interface implemented in conjunction with a full-body robotic exoskeleton.

For more information, read the article “Active tactile exploration using a brain–machine–brain interface”, published in the journal Nature.
