RoboEarth enables robots to share their knowledge over the Internet

A group of about 35 people, part of the European project RoboEarth, is currently creating a worldwide, open-source platform that allows any robot with a network connection to generate, share, and reuse data. RoboEarth is being developed in the hope that it will greatly speed up robot learning and adaptation in complex tasks, as well as give robots the ability to execute tasks that were not explicitly planned for at design time.

For example, suppose a service robot in a hospital room is pre-programmed to serve a drink to a patient. A simple program for this task would have the robot locate the drink, navigate to its position, grasp it, pick it up, locate the patient, navigate to the patient, and finally serve the drink. During task execution, some robots monitor and log their progress and continuously update and extend their rudimentary, pre-programmed world model with additional information. This kind of learning from experience is a form of machine learning.
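The drink-serving program described above can be pictured as a fixed sequence of primitive steps. The sketch below is purely illustrative: the step names mirror the paragraph, while the `WorldModel` class, the `execute_step` placeholder, and the logged fields are hypothetical and do not come from RoboEarth.

```python
from dataclasses import dataclass, field

# Hypothetical primitive steps, taken in order from the drink-serving example.
TASK_STEPS = [
    "locate_drink",
    "navigate_to_drink",
    "grasp_drink",
    "pick_up_drink",
    "locate_patient",
    "navigate_to_patient",
    "serve_drink",
]


@dataclass
class WorldModel:
    """A rudimentary, pre-programmed world model that grows during execution."""
    facts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)


def execute_step(step: str, world: WorldModel) -> bool:
    """Placeholder for a real skill; here every step simply succeeds."""
    # A real robot would run perception or control code here and record
    # what it observed; this sketch only records that the step completed.
    world.facts[f"{step}_done"] = True
    return True


def run_task(steps, world: WorldModel) -> bool:
    """Execute steps in order, logging progress and stopping at the first failure."""
    for step in steps:
        ok = execute_step(step, world)
        world.log.append((step, ok))
        if not ok:
            return False
    return True


world = WorldModel()
print(run_task(TASK_STEPS, world))  # True
print(len(world.log))               # 7
```

The log kept in `world.log` is the kind of progress record that a robot could later share with others.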

The RoboEarth team wants a robot that cannot fulfill a task to ask a person for help and to store any newly learned knowledge. After successfully performing the task, the robot would share its acquired knowledge by uploading it to a Web-style database. Later, the same task might be required again, and a second robot with no prior knowledge of how to execute it could be assigned to perform it. This second robot could query the database for relevant information and download the knowledge previously collected by other robots.
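The upload/query cycle can be sketched with an in-memory stand-in for the Web-style database. `KnowledgeBase`, `upload`, and `query` are hypothetical names chosen for illustration; they are not RoboEarth's actual interface, and a real deployment would sit behind a network service.

```python
from collections import defaultdict


class KnowledgeBase:
    """Hypothetical in-memory stand-in for a shared, Web-style robot database."""

    def __init__(self):
        # Maps a task name to a list of (robot_id, knowledge) records.
        self._records = defaultdict(list)

    def upload(self, task: str, robot_id: str, knowledge: dict) -> None:
        """Store knowledge a robot acquired while performing a task."""
        self._records[task].append((robot_id, dict(knowledge)))

    def query(self, task: str) -> list:
        """Return every record other robots have shared for this task."""
        return list(self._records.get(task, []))


kb = KnowledgeBase()

# Robot A needed help from a person, succeeded, and shares what it learned.
kb.upload("serve_drink", "robot_a", {"cup_location": "bedside table"})

# Robot B has no prior knowledge of the task, so it asks the database first.
hints = kb.query("serve_drink")
print(hints)                    # [('robot_a', {'cup_location': 'bedside table'})]
print(kb.query("open_window"))  # []
```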

Although differences between the two robots (e.g., due to wear and tear or different robot hardware) and their environments (e.g., due to changed object locations or a different hospital room) mean that the downloaded information may not be sufficient to allow this robot to re-perform a previously successful task, this information is still useful as a future reference and a starting point.

Recognized objects, such as the bed, can now provide occupancy information even for areas which are not directly observed. Detailed object models (e.g., of a cup) can increase the speed and reliability of the robot’s interactions. Task descriptions of previously successful actions (e.g., driving around the bed) can provide guidance on how the robot may be able to successfully perform its task.
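One simple way a recognized object can supply occupancy information for unobserved areas is to stamp its known footprint into an occupancy grid once its position is estimated. The sketch below assumes a 2D grid, an axis-aligned rectangular footprint, and made-up bed dimensions; none of these details come from RoboEarth.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def make_grid(width: int, height: int) -> list:
    """Create a grid in which every cell starts out unobserved."""
    return [[UNKNOWN] * width for _ in range(height)]


def stamp_object(grid: list, x: int, y: int, w: int, h: int) -> None:
    """Mark the footprint of a recognized object as occupied.

    (x, y) is the footprint's first cell (column x, row y) and (w, h) its size
    in cells, both assumed to come from a downloaded object model plus a pose
    estimate. Cells are marked even if no sensor has observed them directly.
    """
    for row in range(y, min(y + h, len(grid))):
        for col in range(x, min(x + w, len(grid[0]))):
            grid[row][col] = OCCUPIED


grid = make_grid(10, 8)
# Suppose the robot sees only one corner of the bed, but the object model
# says the bed is 4 x 6 cells; the whole footprint becomes occupied.
stamp_object(grid, x=2, y=1, w=4, h=6)
print(sum(cell == OCCUPIED for row in grid for cell in row))  # 24
```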

This and other prior information (e.g., the previous location of the cup, the likely place to find the patient) can guide this second robot’s search and execution strategy. In addition, as the two robots continue to perform their tasks and pool their data, the quality of prior information will improve and begin to reveal underlying patterns and correlations about the robots and their environment.
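The idea that pooled data improves prior information can be illustrated with the simplest possible prior: an empirical frequency of where the cup was found, merged across robots. The location labels and observations below are invented for illustration; a real system would likely use a richer probabilistic model.

```python
from collections import Counter


def pool_observations(*robot_logs) -> Counter:
    """Merge per-robot lists of observed cup locations into one tally."""
    pooled = Counter()
    for log in robot_logs:
        pooled.update(log)
    return pooled


def location_prior(counts: Counter) -> dict:
    """Turn raw counts into a probability for each location."""
    total = sum(counts.values())
    return {loc: n / total for loc, n in counts.items()}


robot_a = ["bedside table", "bedside table", "windowsill"]
robot_b = ["bedside table", "tray"]

prior = location_prior(pool_observations(robot_a, robot_b))
# The search can start at the most probable location.
best = max(prior, key=prior.get)
print(best, prior[best])  # bedside table 0.6
```

As more robots contribute observations, the tallies grow and the estimated probabilities become more reliable.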

“We believe that the availability of such prior information is a necessary condition for robots to operate in more complex, unstructured environments,” said René van de Molengraft, the project’s coordinator at the Technical University of Eindhoven. “Ultimately, the nuanced and complicated nature of human spaces can’t be summarized within a limited set of specifications.”

The RoboEarth team unites researchers from the University of Zaragoza, the University of Stuttgart, the Technical University of Munich, the Technical University of Eindhoven, Philips Innovation Services, and ETH Zurich.

One year into the project, the team has successfully demonstrated downloading task descriptions from RoboEarth and executing a simple task. The network is also already capable of accepting simple uploads, such as an improved map of the environment, from a small number of robots.

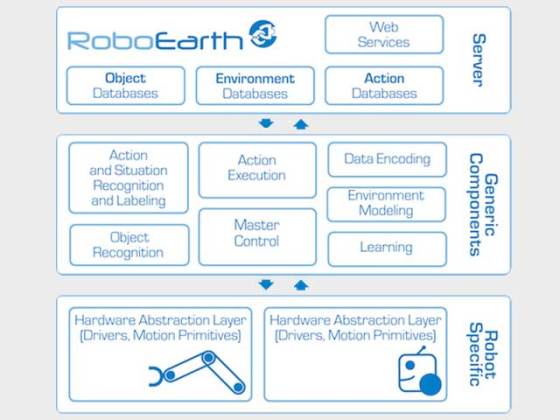

In an attempt to set standards, the RoboEarth collaborators will also implement components for a ROS-compatible, robot-unspecific, high-level operating system, as well as components for robot-specific, low-level controllers accessible via a Hardware Abstraction Layer.
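The split between robot-unspecific, high-level components and robot-specific controllers behind a Hardware Abstraction Layer can be sketched as an abstract interface with per-robot implementations. The class and method names (`BaseController`, `move_to`, and the two example robots) are hypothetical and are not RoboEarth or ROS APIs.

```python
from abc import ABC, abstractmethod


class BaseController(ABC):
    """Hypothetical hardware abstraction layer: what every robot must provide."""

    @abstractmethod
    def move_to(self, x: float, y: float) -> str:
        """Drive the base to (x, y); returns a description of what was done."""


class WheeledRobot(BaseController):
    """Robot-specific, low-level controller for a wheeled base."""

    def move_to(self, x: float, y: float) -> str:
        return f"wheels: drive to ({x}, {y})"


class LeggedRobot(BaseController):
    """Robot-specific, low-level controller for a walking robot."""

    def move_to(self, x: float, y: float) -> str:
        return f"legs: walk to ({x}, {y})"


def go_to_patient(robot: BaseController) -> str:
    """Robot-unspecific, high-level behaviour written only against the HAL."""
    patient_position = (3.0, 1.5)  # assumed, e.g. downloaded from a shared map
    return robot.move_to(*patient_position)


for robot in (WheeledRobot(), LeggedRobot()):
    print(go_to_patient(robot))
# wheels: drive to (3.0, 1.5)
# legs: walk to (3.0, 1.5)
```

Because `go_to_patient` depends only on the abstract interface, the same high-level behaviour could be reused on any robot that implements the layer.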

Although this approach would allow robots to share their knowledge via the Internet, outsource computation to the cloud, link the acquired data, and potentially standardize the software used in robot programming, there are a few drawbacks to consider. Robot developers would still need to build robots that are “smart enough” for their own purposes, because a robot could lose access to the Internet database (due to a malfunction of the robot or the network, or because the database is unavailable). Protecting the database and the communication protocol is another important issue, because weak protection could enable various (potentially dangerous) malicious acts by hackers.

If the team does not secure the database and properly encrypt the communication protocol, I believe this system could prove very dangerous, precisely because it is meant to help robots that work in our environment.

Hello. My name is Hamidreza, and I’m a mechatronics engineer.

Could you please tell me what live online surgery is, and what robotic online surgery and remote-controlled operation are?

Have a good time!

Bye.

This is so cool, but I’m kind of worried about Skynet!! Pretty soon we will have some great networked intelligence that wants to enslave us.

Inspired by the movie Tenku no Shiro Rapyuta (English title: Castle in the Sky) by Hayao Miyazaki, where Rapyuta is the castle in the sky inhabited by robots, RoboEarth released Rapyuta: The RoboEarth Cloud Engine.

Rapyuta is an open-source cloud robotics platform. It implements a Platform-as-a-Service (PaaS) framework designed specifically for robotics applications.

Rapyuta helps robots offload heavy computation by providing secure, customizable computing environments in the cloud. Robots can start their own computing environment, launch any computational node uploaded by the developer, and communicate with the launched nodes using the WebSocket protocol.
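WebSocket connections (RFC 6455) carry messages in frames, and frames sent by a client such as a robot must be masked. The sketch below encodes and decodes a single masked text frame carrying a JSON payload, using only the Python standard library. The message fields (`type`, `dest`, `data`) are hypothetical and do not describe Rapyuta's actual message format; in practice a robot would use an existing WebSocket client library rather than hand-built frames.

```python
import json
import os
import struct
from typing import Optional


def encode_client_text_frame(text: str, mask: Optional[bytes] = None) -> bytes:
    """Build one final, masked text frame (opcode 0x1), as a client must send it."""
    payload = text.encode("utf-8")
    mask = mask if mask is not None else os.urandom(4)
    header = bytearray([0x80 | 0x1])  # FIN bit set, opcode 0x1 = text
    length = len(payload)
    if length < 126:
        header.append(0x80 | length)  # 0x80 = MASK bit, then 7-bit length
    elif length < 2**16:
        header.append(0x80 | 126)  # 16-bit extended length follows
        header += struct.pack("!H", length)
    else:
        header.append(0x80 | 127)  # 64-bit extended length follows
        header += struct.pack("!Q", length)
    masked = bytes(b ^ mask[i % 4] for i, b in enumerate(payload))
    return bytes(header) + mask + masked


def decode_client_text_frame(frame: bytes) -> str:
    """Reverse encode_client_text_frame (single masked text frame only)."""
    length = frame[1] & 0x7F
    offset = 2
    if length == 126:
        (length,) = struct.unpack("!H", frame[2:4])
        offset = 4
    elif length == 127:
        (length,) = struct.unpack("!Q", frame[2:10])
        offset = 10
    mask = frame[offset:offset + 4]
    payload = frame[offset + 4:offset + 4 + length]
    return bytes(b ^ mask[i % 4] for i, b in enumerate(payload)).decode("utf-8")


# Hypothetical request asking a cloud node to process a camera image.
message = json.dumps({"type": "data", "dest": "image_node", "data": "camera frame placeholder"})
frame = encode_client_text_frame(message, mask=b"\x01\x02\x03\x04")
print(frame[0] == 0x81, decode_client_text_frame(frame) == message)  # True True
```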

http://www.youtube.com/watch?v=4-ir1ieqKyc

Each robot connected to Rapyuta has a secure computing environment (rectangular boxes), giving it the ability to move its heavy computation into the cloud. Computing environments have a high-bandwidth connection to the RoboEarth knowledge repository (stacked circular disks).

This allows robots to process data directly inside their computing environment in the cloud, without first downloading it for local processing. Furthermore, computing environments are tightly interconnected with one another, which paves the way for deploying teams of robots.